1 - 1 of 1 results found

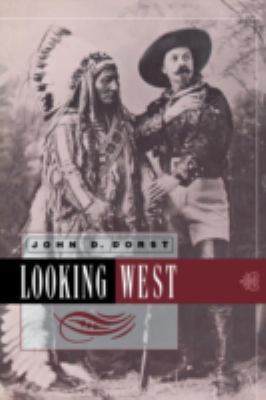

Looking West

Edition Description

The American West is a region, perhaps more than any other in the United States, that comes to us in visual terms. The grand landscapes, open vistas, and magisterial views have made the act of looking a defining feature of how we experience the West as an actual place. In...

Edition Details

Format: Paperback

Language: English

ISBN: 0812214404

$33.81

50 Available